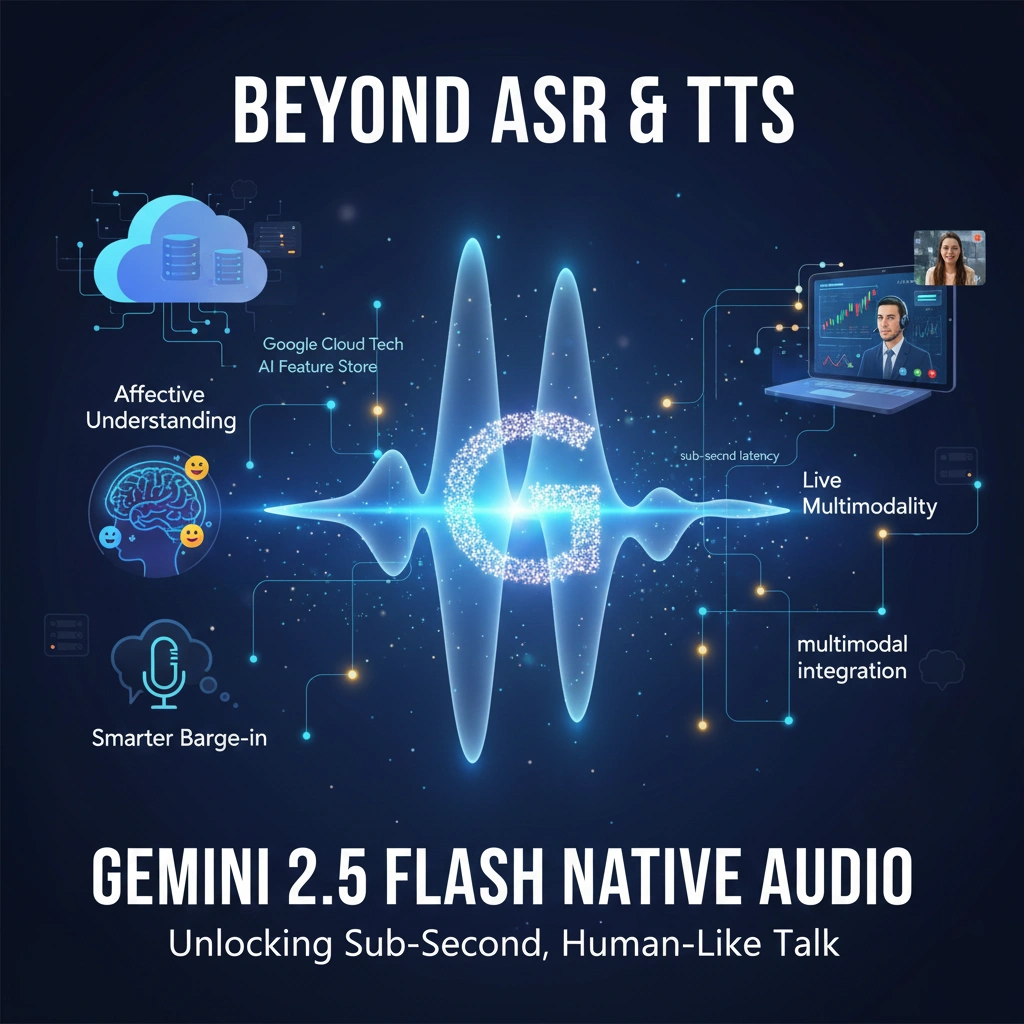

Beyond ASR & TTS: Gemini 2.5 Flash Native Audio Unlocks Sub-Second, Human-Like Talk

For years, the promise of truly fluid, human-like conversational AI has been held back by one persistent culprit: latency. We’ve all experienced it: the awkward, tell-tale pause after we finish speaking, followed by a mechanical synthesized voice, signaling that the AI is busy processing and stitching together its response. The classic pipeline is strictly sequential. Automatic Speech Recognition (ASR) transcribes the user's speech, a large language model (LLM) reasons over the transcript, and a Text-to-Speech (TTS) engine voices the reply. Each stage waits on the one before it, and that chain fundamentally bottlenecks real-time interaction. It’s a multi-step dance that breaks the illusion of natural conversation.

But that era is officially over.

The introduction of the Gemini 2.5 Flash Native Audio model and its companion, the Gemini Live API, marks a seismic shift in the world of conversational technology. Forget TTS and the sequential systems of the past. Gemini’s Native Audio doesn't listen via ASR; it processes the raw acoustic signal directly. This unified, low-latency architecture bypasses the old bottleneck, enabling interactions with sub-second latency that feel utterly, compellingly human. This is not just an incremental update; this is the real AI leap, fundamentally redefining how humans and machines communicate.

I. What is Gemini 2.5 Flash Native Audio?

Gemini 2.5 Flash Native Audio is Google's groundbreaking large language model (LLM) designed specifically for ultra-low-latency, human-like real-time voice interaction. It is not merely an updated text model; it represents a fundamental architectural shift in how AI processes speech.

1. Core Innovation: Native Audio Processing

Unlike earlier voice assistants, which relied on a slow, three-step chain of ASR (Speech-to-Text) → LLM (Reasoning) → TTS (Text-to-Speech), Native Audio is a unified, end-to-end model.

It achieves its breakthrough by:

- Processing Raw Audio Directly: The model is trained to analyze the raw acoustic signal itself, bypassing the need for intermediary text transcription (ASR) and text synthesis (TTS).

- Unifying the Pipeline: By integrating input processing, reasoning, and audio output generation into a single system, it eliminates the computational delays inherent in the multi-step chain.
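Why does unifying the pipeline matter so much for latency? The back-of-the-envelope sketch below compares time-to-first-audio in a strictly sequential cascade with a single end-to-end model. Every stage timing in it is a made-up placeholder chosen for easy arithmetic, not a measured benchmark of Gemini or any other system. The `Stage` type and the function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stage:
    """Timing for one pipeline stage, in milliseconds (illustrative values only)."""
    name: str
    first_output_ms: float  # delay until the stage emits its first output
    full_output_ms: float   # delay until the stage has produced all of its output

def cascaded_first_audio_ms(asr: Stage, llm: Stage, tts: Stage) -> float:
    """Strictly sequential ASR -> LLM -> TTS: the LLM waits for the full transcript,
    TTS waits for the full reply text, and only then does the first audio play."""
    return asr.full_output_ms + llm.full_output_ms + tts.first_output_ms

def unified_first_audio_ms(model: Stage) -> float:
    """One end-to-end audio model: first audio plays as soon as the model emits it."""
    return model.first_output_ms

# Placeholder numbers chosen for easy arithmetic, NOT measured benchmarks.
ASR = Stage("asr", first_output_ms=100, full_output_ms=300)
LLM = Stage("llm", first_output_ms=250, full_output_ms=900)
TTS = Stage("tts", first_output_ms=200, full_output_ms=1500)
NATIVE = Stage("native-audio", first_output_ms=600, full_output_ms=2000)

if __name__ == "__main__":
    print("cascaded:", cascaded_first_audio_ms(ASR, LLM, TTS), "ms")  # 1400 ms
    print("unified: ", unified_first_audio_ms(NATIVE), "ms")          # 600 ms
```

Real cascades can narrow this gap by streaming between stages (for example, starting TTS on the first finished sentence). The sketch models only the strictly sequential case described above, where each stage's full output becomes the next stage's start condition.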

2. Key Capabilities

- Sub-Second Latency: The primary benefit is achieving interaction speeds so fast (sub-second) that the conversation feels instantaneous and natural, eliminating the awkward pauses common in older AI.

- Affective Understanding: It captures the nuances, tone, and emotion (affect) within the user’s voice, allowing the AI to respond with appropriate empathy and context-awareness.

- Real-Time Fusion: When accessed via the Gemini Live API, it enables simultaneous processing of audio input alongside other modalities (like visual data), supporting truly holistic, multi-modal conversations.
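A streaming client sends audio in small chunks as it is captured, rather than waiting for the user to finish. This is part of what makes a sub-second reply possible. The sketch below only prepares those chunks. It assumes mono, 16-bit, 16 kHz PCM input, so verify the exact format against the current Live API documentation. It also deliberately leaves out the SDK call that sends each chunk, because that call depends on your client library version. The 20 ms frame size is an arbitrary illustrative choice, and the helper names are hypothetical.

```python
import struct
from typing import Iterator, Sequence

# Assumed input format: mono, 16-bit signed little-endian PCM at 16 kHz.
# Check the current Live API docs for the exact format your model version expects.
SAMPLE_RATE_HZ = 16_000
BYTES_PER_SAMPLE = 2

def pcm_from_samples(samples: Sequence[int]) -> bytes:
    """Pack integer samples into 16-bit little-endian PCM, clamping to the int16 range."""
    clamped = [max(-32768, min(32767, s)) for s in samples]
    return struct.pack(f"<{len(clamped)}h", *clamped)

def frame_pcm(pcm: bytes, frame_ms: int = 20) -> Iterator[bytes]:
    """Yield fixed-duration chunks of PCM audio; the final chunk may be shorter."""
    samples_per_frame = SAMPLE_RATE_HZ * frame_ms // 1000
    frame_bytes = samples_per_frame * BYTES_PER_SAMPLE
    for start in range(0, len(pcm), frame_bytes):
        yield pcm[start:start + frame_bytes]

if __name__ == "__main__":
    one_second = pcm_from_samples([0] * SAMPLE_RATE_HZ)
    frames = list(frame_pcm(one_second))
    print(len(frames), "frames of", len(frames[0]), "bytes")  # 50 frames of 640 bytes
```

At the assumed format, one second of audio is 32,000 bytes, which splits into fifty 640-byte frames. Smaller frames let the model start hearing the user sooner, at the cost of more messages per second.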

In essence, Gemini 2.5 Flash Native Audio ends the era of "talking to a machine" and ushers in the age of natural, collaborative conversation with AI.

II. The Killer Features Enabled by the Gemini Live API

The raw processing power of the Native Audio model is made accessible to developers and enterprises through the Gemini Live API. This API is the gateway to unlocking capabilities that were previously confined to science fiction:

A. Real-Time Affective Understanding

The most impressive aspect of the native approach is its ability to understand affect. Because the model processes the raw acoustic data, it captures far more than just semantic meaning. It detects tone, emotional cadence, emphasis, and speaking pace. This allows the AI to react contextually: if a user sounds frustrated, the AI can adopt a calming, empathetic tone in its response, moving beyond mere instruction-following to true collaborative dialogue.
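This article does not describe Native Audio's internals. An end-to-end model learns cues like these implicitly from the signal. To make concrete what a text transcript throws away, the sketch below computes two classic hand-crafted acoustic features per frame. Root-mean-square (RMS) energy is a rough loudness and emphasis cue. Zero-crossing rate roughly separates noisy, unvoiced sounds from voiced ones. This is a swapped-in textbook illustration, not how Gemini represents affect. The function names, the 320-sample frame (20 ms at an assumed 16 kHz) and the 1.5× emphasis ratio are all hypothetical.

```python
import math
from statistics import mean
from typing import List, Sequence

def frame_rms(samples: Sequence[int]) -> float:
    """Root-mean-square amplitude of one frame: a rough loudness measure."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def zero_crossing_rate(samples: Sequence[int]) -> float:
    """Fraction of adjacent sample pairs that change sign; higher for noisy,
    unvoiced sounds (like "s") than for voiced ones (like vowels)."""
    if len(samples) < 2:
        return 0.0
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(samples) - 1)

def loudness_profile(samples: Sequence[int], frame_len: int = 320) -> List[float]:
    """RMS per frame; 320 samples is 20 ms at an assumed 16 kHz sample rate."""
    return [frame_rms(samples[i:i + frame_len]) for i in range(0, len(samples), frame_len)]

def emphasized_frames(profile: Sequence[float], ratio: float = 1.5) -> List[int]:
    """Indices of frames noticeably louder than the average frame (a crude emphasis cue)."""
    if not profile:
        return []
    threshold = ratio * mean(profile)
    return [i for i, rms in enumerate(profile) if rms > threshold]
```

Two utterances with identical transcripts can produce very different loudness profiles. That difference is exactly the information an ASR-first pipeline discards before the LLM ever sees the input.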

B. Smarter Barge-in and Listening

In natural human conversation, we often interrupt or complete each other's sentences. "Barge-in" is the ability to cut in while the assistant is still talking and have it stop and listen. Older systems handled this poorly. They typically relied on simple voice activity detection that only distinguished "silence" from "speech," so any pause that ran long enough could be mistaken for the end of a turn. The Native Audio model is different. It acts as a "co-listener." Because it processes the audio stream continuously, it can better judge whether a user is pausing for thought or has finished their statement. This dramatically reduces unnecessary interruptions and makes the flow of conversation genuinely collaborative.
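For contrast, here is the kind of baseline the paragraph describes. This hypothetical detector knows only "speech" versus "silence," using an energy threshold on each frame's RMS. It ends the user's turn after a fixed stretch of silence and flags barge-in when speech starts while the assistant is playing audio. It is not how Native Audio works. The class name, event labels and thresholds are all illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class NaiveTurnDetector:
    """Baseline endpointing with an energy threshold and a fixed silence timeout.
    All thresholds are hypothetical values for illustration."""
    energy_threshold: float = 500.0   # frame RMS at or above this counts as speech
    silence_frames_to_end: int = 35   # e.g. 35 frames x 20 ms = 700 ms of silence
    assistant_speaking: bool = False  # set True while the assistant's audio is playing
    events: List[str] = field(default_factory=list, init=False)
    _user_active: bool = field(default=False, init=False)
    _silent_run: int = field(default=0, init=False)

    def push(self, frame_rms: float) -> None:
        """Consume the RMS of one audio frame and record any turn events."""
        if frame_rms >= self.energy_threshold:
            self._silent_run = 0
            if not self._user_active:
                self._user_active = True
                if self.assistant_speaking:
                    # Barge-in: the user talks over the assistant, so stop playback.
                    self.assistant_speaking = False
                    self.events.append("barge_in")
                else:
                    self.events.append("user_start")
        elif self._user_active:
            self._silent_run += 1
            # A fixed timeout cannot tell a thinking pause from a finished thought.
            if self._silent_run >= self.silence_frames_to_end:
                self._user_active = False
                self._silent_run = 0
                self.events.append("end_of_turn")
```

The single `silence_frames_to_end` knob is the weak point. Lowering it cuts off slow or hesitant speakers, and raising it adds that delay to every reply. A model that attends to the content and prosody of the audio is meant to sidestep that trade-off.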

C. Live Multimodality

The power of the Gemini Live API extends beyond sound. It is built for fusion. Users can simultaneously speak (audio input) while showing the assistant a complex diagram or a live video feed (visual input). The API processes these different modalities concurrently, allowing the AI to integrate real-time visual context into its spoken reply, making it the most holistic and dynamic interaction tool available.
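The article does not describe how the Live API fuses modalities internally. On the client side, though, you may need to combine separate microphone and camera sources into one time-ordered outgoing stream. One simple way to do that is a lazy k-way merge by capture timestamp, sketched below with the standard library's `heapq.merge`. The `Chunk` type and its fields are hypothetical. The real message format for sending audio and video comes from the Live API and its SDKs.

```python
import heapq
from dataclasses import dataclass
from typing import Iterable, Iterator

@dataclass(frozen=True)
class Chunk:
    t_ms: int       # capture timestamp in milliseconds
    kind: str       # hypothetical label: "audio" or "video"
    payload: bytes  # encoded audio chunk or video frame

def interleave(*streams: Iterable[Chunk]) -> Iterator[Chunk]:
    """Merge per-modality streams, each already sorted by t_ms, into one
    time-ordered stream, holding only one pending item per stream."""
    return heapq.merge(*streams, key=lambda c: c.t_ms)

if __name__ == "__main__":
    audio = [Chunk(t, "audio", b"") for t in (0, 20, 40, 60)]
    video = [Chunk(30, "video", b"")]
    for c in interleave(audio, video):
        print(c.t_ms, c.kind)
```

Because `heapq.merge` is lazy, the same function works on live generators that yield chunks as they are captured, not just on prebuilt lists.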

III. Deploying Native Audio with Google Cloud Tech

This technological leap is not just for consumer gadgets; it’s poised to transform enterprise applications globally.

For businesses looking to deploy these high-performance, low-latency solutions, the entire ecosystem is built upon robust Google Cloud tech. This seamless integration allows organizations to scale their real-time AI applications, from customer service chatbots to virtual assistants, with enterprise-grade reliability and security.

Deploying Context-Aware AI with Vertex AI

Building truly personalized and effective AI agents requires vast amounts of context. This is where MLOps tools become essential. To power bespoke experiences that leverage the Native Audio model’s affective understanding, developers must integrate external data sources efficiently.

- Feature management: Services like the Vertex AI Feature Store can manage, serve, and retrieve the contextual metadata (for example, customer profiles or account state) needed to make a Gemini 2.5 Flash Native Audio response not only fast but also relevant and personalized.
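The sketch below shows the general shape of that integration under heavy assumptions. A plain dict stands in for the feature store, and the Vertex AI Feature Store client API is not shown. The retrieved values are folded into a text instruction that would be supplied when the voice session starts. Entity IDs, feature names, helper names and the instruction wording are all hypothetical.

```python
from typing import Dict, Mapping

# Hypothetical in-memory stand-in for an online feature store keyed by entity ID.
# A real deployment would read these values from a managed service instead.
FEATURES: Dict[str, Dict[str, str]] = {
    "customer-42": {
        "tier": "gold",
        "open_ticket": "late delivery",
        "preferred_language": "en",
    },
}

BASE_INSTRUCTION = "You are a concise, friendly voice support agent."

def fetch_features(entity_id: str,
                   store: Mapping[str, Mapping[str, str]] = FEATURES) -> Dict[str, str]:
    """Return a copy of the entity's features, or an empty dict if it is unknown."""
    return dict(store.get(entity_id, {}))

def build_session_context(entity_id: str,
                          store: Mapping[str, Mapping[str, str]] = FEATURES) -> str:
    """Fold per-customer context into one instruction string for the voice session."""
    features = fetch_features(entity_id, store)
    if not features:
        return BASE_INSTRUCTION
    context = "; ".join(f"{k}={v}" for k, v in sorted(features.items()))
    return f"{BASE_INSTRUCTION} Known customer context: {context}."
```

Looking the context up once, before the session opens, keeps the lookup off the per-turn path. That way it does not eat into the latency budget the native audio model is meant to save.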

IV. Impact and Market Reaction

The technological shift heralded by Native Audio is already generating massive buzz across the tech world.

The core promise, latency reduction, has captured the imagination of the global developer community. On platforms like Kaggle, where data scientists routinely explore new techniques in machine learning and audio processing, the Native Audio approach is drawing attention for its potential to solve hard real-time audio problems. That interest suggests Gemini 2.5 Flash Native Audio is seen not just as a product update, but as a foundational change in the way we approach audio-based AI.

V. Conclusion

The question is no longer if you will adopt Native Audio, but when you will realize your competition already has. This seismic shift demands immediate attention from every developer and enterprise leader. We are past incremental improvement; this is a foundational change. Don't be left analyzing the future from the sidelines!