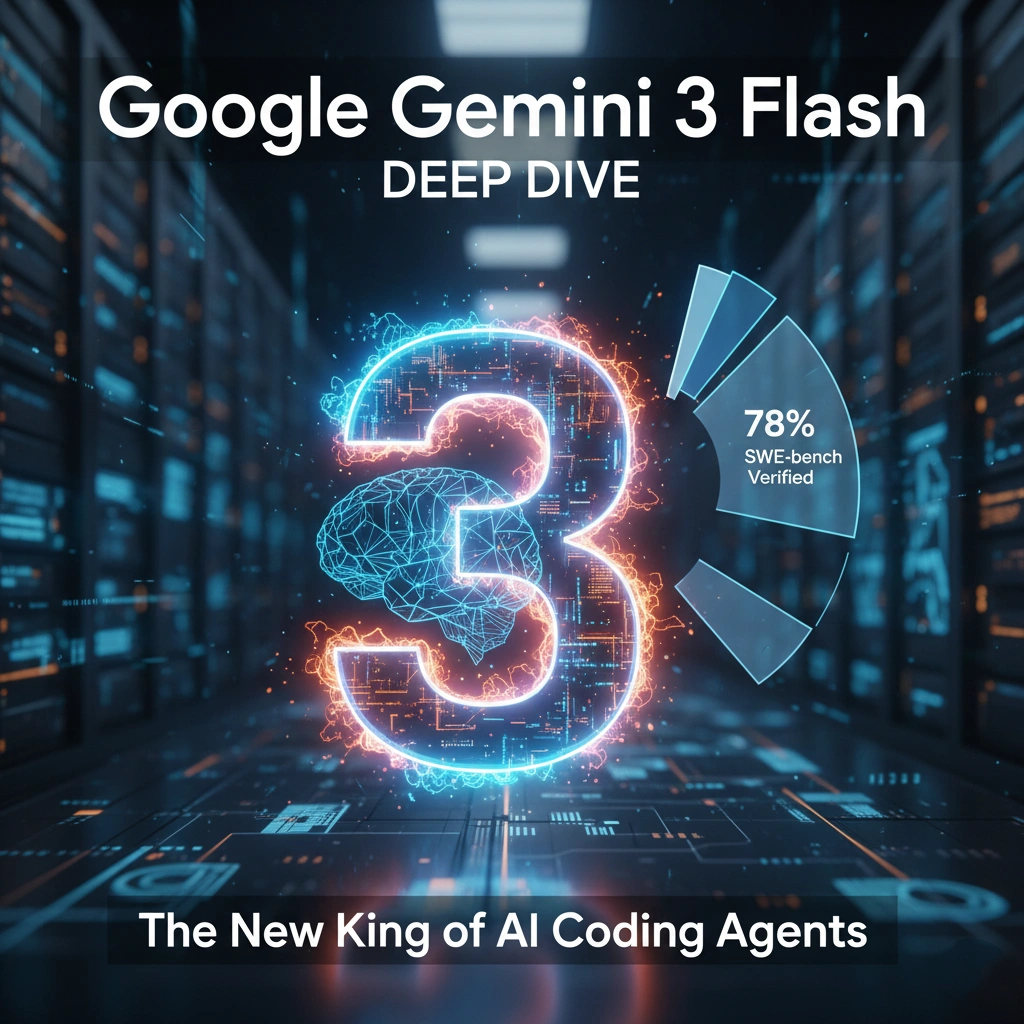

Google Gemini 3 Flash Deep Dive: The New King of AI Coding Agents

In the rapidly evolving landscape of Large Language Models, the "Flash" variant has traditionally played the role of the fast but lightweight sibling. However, with the official release of gemini-3-flash-preview, Google has effectively flipped the script.

If you’ve been following the Gemini 3 Flash threads on Reddit, you know the hype is real. This isn’t a minor iteration on the Flash line; it’s a complete redefinition of what a high-speed AI model can achieve.

1. The 78% Milestone: A New Benchmark for Coding

The headline result among Gemini 3 Flash’s published benchmarks is its performance on SWE-bench Verified. For the uninitiated, SWE-bench isn’t a simple multiple-choice test; it’s a grueling evaluation in which an AI must resolve real-world GitHub issues inside large, complex codebases.

Gemini 3 Flash achieved a staggering 78% success rate, a score that has sent shockwaves through the developer community. To put this in perspective:

- The "Student" Becomes the "Master": It outperforms its predecessor, Gemini 2.5 Flash, by a wide margin.

- Beating the Flagship: Surprisingly, it even edges out Gemini 3 Pro (76.2%) on this agentic coding benchmark.

- Unmatched Efficiency: It achieves these results while maintaining the low latency and cost-effectiveness the Flash series is known for.
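The gap between the two models is easier to judge in task counts. SWE-bench Verified contains 500 human-validated tasks, so this minimal sketch simply converts the reported percentages into numbers of resolved issues. It assumes both scores were measured on the full 500-task set; if the published figures are averages over several runs, the counts are approximate.

```python
# Convert reported SWE-bench Verified resolution rates into task counts.
# Assumption: both scores are computed over the full 500-task Verified split.
VERIFIED_TASKS = 500

def tasks_resolved(rate_percent: float, total: int = VERIFIED_TASKS) -> int:
    """Return the number of tasks implied by a percentage resolution rate."""
    return round(total * rate_percent / 100)

flash = tasks_resolved(78.0)  # Gemini 3 Flash
pro = tasks_resolved(76.2)    # Gemini 3 Pro
print(f"Flash: {flash}, Pro: {pro}, gap: {flash - pro} tasks")
```

Run as-is, it prints 390 for Flash and 381 for Pro, a gap of 9 tasks out of 500.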

2. Why Developers Are Switching to gemini-3-flash-preview

Beyond the raw numbers, gemini-3-flash-preview truly shines in practice as an autonomous coding agent. Thanks to its 1-million-token context window and its adjustable "Thinking" mode, it can keep a consistent line of reasoning across a large, multi-file codebase without losing track of the logic.
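Whether a given repository actually fits in that 1-million-token window can be estimated before sending anything. This sketch uses the rough rule of thumb from Google’s documentation that one Gemini token is about 4 characters; that ratio is an assumption, and source code often tokenizes differently from prose, so treat the result as a ballpark. The function names and the `headroom` parameter are hypothetical, not part of any SDK.

```python
from pathlib import Path

# Rough heuristic (assumption): about 4 characters per token. Real counts
# depend on the tokenizer and on the contents of the files.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW_TOKENS = 1_000_000

def estimate_repo_tokens(root: str, suffixes: tuple[str, ...] = (".py",)) -> int:
    """Estimate tokens for all files under `root` whose suffix is in `suffixes`."""
    total_chars = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN

def fits_in_context(root: str, headroom: int = 10_000,
                    suffixes: tuple[str, ...] = (".py",)) -> bool:
    """True if the estimate plus `headroom` tokens for instructions fits the window."""
    return estimate_repo_tokens(root, suffixes) + headroom <= CONTEXT_WINDOW_TOKENS
```

For an exact figure, the Gemini API’s token-counting method (`countTokens`) is the authoritative source; the heuristic is only useful for a quick first check.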

As many early adopters on Reddit have noted, the model feels remarkably "decisive." Earlier versions could hallucinate when faced with deep directory structures; gemini-3-flash-preview navigates them with a level of spatial and logical reasoning that previously required a Pro-tier model.

Key Performance Gains:

- Speed: 3x faster than Gemini 2.5 Pro.

- Cost: About 1/4 the per-token price of the Pro tier.

- Reasoning: Hits 90.4% on GPQA Diamond, proving that "Flash" no longer means "Simple."
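The cost ratio can be checked for a specific workload with a few lines of arithmetic. The prices below are placeholders chosen only to reflect a 1:4 ratio between the tiers; they are not Google’s published rates, so substitute current figures from the official pricing page. The input-heavy token mix is likewise an assumption about a typical agentic coding turn.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in USD (placeholder values, not official rates)."""
    input_per_m: float
    output_per_m: float

def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request with the given token counts."""
    return (input_tokens * p.input_per_m + output_tokens * p.output_per_m) / 1_000_000

# Hypothetical prices with a 1:4 ratio between the two tiers.
flash = Pricing(input_per_m=1.0, output_per_m=4.0)
pro = Pricing(input_per_m=4.0, output_per_m=16.0)

# Assumed workload: a large context in, a short patch out.
tokens_in, tokens_out = 200_000, 5_000
ratio = request_cost(flash, tokens_in, tokens_out) / request_cost(pro, tokens_in, tokens_out)
print(f"Flash costs {ratio:.2f}x as much as Pro for this request")
```

With the same 1:4 ratio on both input and output, the request costs a quarter as much whatever the token mix; if the real input and output ratios differ, the mix starts to matter.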

3. Conclusion

Whether you are calling gemini-3-flash-preview through the API for high-frequency enterprise workflows or using it as your daily driver in a coding IDE, the conclusion is clear: the model has shifted the Pareto frontier of AI intelligence versus cost.
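For readers who want to try the model directly, here is a minimal sketch of a single call to the Gemini REST API using only Python’s standard library. The `generateContent` endpoint, the `x-goog-api-key` header, and the model ID `gemini-3-flash-preview` follow Google’s public API; the `thinkingLevel` field and its `"low"` value are assumptions based on the Gemini 3 documentation and should be checked against the current API reference. `build_request` and `extract_text` are hypothetical helper names, and the script only sends a request when a `GEMINI_API_KEY` environment variable is set.

```python
import json
import os
import urllib.request

# Endpoint pattern of the Gemini REST API; the model ID comes from the article.
API_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"

def build_request(prompt: str, api_key: str,
                  model: str = "gemini-3-flash-preview",
                  thinking_level: str = "low") -> urllib.request.Request:
    """Build (but do not send) a generateContent request.

    Assumption: `thinkingLevel` controls reasoning depth for Gemini 3 models;
    verify the accepted values in the current API reference.
    """
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"thinkingConfig": {"thinkingLevel": thinking_level}},
    }
    return urllib.request.Request(
        API_URL.format(model=model),
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )

def extract_text(response: dict) -> str:
    """Concatenate the text parts of the first candidate in a response."""
    parts = response["candidates"][0]["content"]["parts"]
    return "".join(p.get("text", "") for p in parts)

if __name__ == "__main__" and os.environ.get("GEMINI_API_KEY"):
    req = build_request("Explain this function: def add(a, b): return a + b",
                        os.environ["GEMINI_API_KEY"])
    with urllib.request.urlopen(req, timeout=60) as resp:
        print(extract_text(json.load(resp)))
```

Keeping request construction separate from sending makes it easy to log or inspect payloads in high-frequency workflows before they hit the network.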

Google’s latest Gemini 3 Flash benchmark results aren’t just numbers on a chart; they mark the dawn of the autonomous AI coding agent era. If you haven’t switched your production workloads to gemini-3-flash-preview yet, you might already be playing catch-up.

What do you think? Does the 78% SWE-bench score change how you view "Flash" models?