Qwen-Image-Layered Model Explained: The Future of Layered Image Editing

Qwen has just released Qwen-Image-Layered, its latest image model and one that is already drawing attention worldwide. This article explains what Qwen-Image-Layered represents, where it stands today, how you can benefit from the layered-editing mindset before it becomes fully commercial, and how SuperMaker helps you apply those workflows right now.

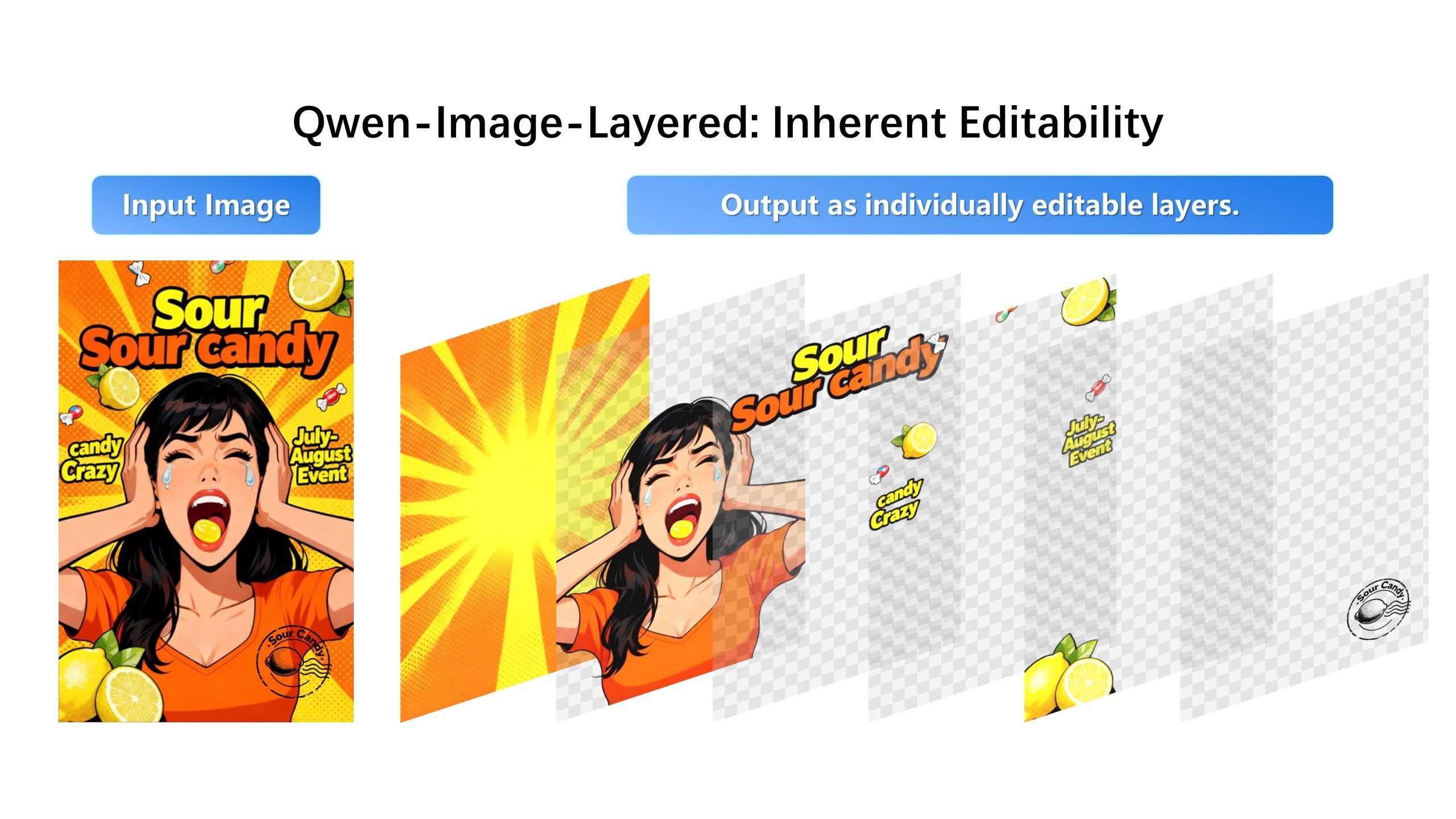

Artificial intelligence has never been short on breakthroughs, but every now and then, a development appears that feels like a genuine shift in creative infrastructure. The newly released Qwen-Image-Layered model is one of those moments. Rather than promising slightly better photorealism or faster diffusion sampling, this model pushes toward something deeper: inherent, native editability inside the image itself.

Instead of treating an image as a flat and indivisible arrangement of pixels, the Qwen-Image-Layered model attempts to decompose a single raster input into multiple RGBA layers. Each layer contains meaningful visual components, complete with transparency, structural boundaries, and semantic isolation. In practical terms, that means one image becomes a stack of selectively editable elements, more like a Photoshop project file than a traditional JPEG.

For designers, marketers, ecommerce operators, and anyone producing visual content at scale, this direction carries major implications. The more editable an image becomes, the more it can be reused, localized, animated, or monetized. If today’s diffusion models are rendering engines, layered-image models are on track to become fully automated asset factories.

A New Breakthrough: What the Qwen-Image-Layered Model Actually Is

The Qwen-Image-Layered model was introduced very recently through a public research paper and an accompanying official blog announcement. Unlike typical generative improvements—resolution upgrades, faster sampling, or better text rendering—this model introduces a conceptual leap in representation.

The core promise is simple to understand but complex to achieve:

- You feed the model a normal, flat, three-channel RGB image (a standard color image).

- The model outputs multiple RGBA layers (color images with a transparent background), each containing objects, details, or visual segments.

- Every layer includes transparency, meaning overlap and occlusion can be reconstructed.

This is dramatically different from conventional segmentation masks. A mask identifies foreground and background regions but does not reconstruct edges, alpha boundaries, or detailed interior structure. A mask cannot restore what is hidden behind an object. The Qwen-Image-Layered model attempts exactly that: semantic decomposition with recoverable occlusion.
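To make the idea of a layer stack concrete, here is a minimal pure-Python sketch of how RGBA layers recombine into a flat image using the standard "over" compositing rule. It is an illustration only and does not reproduce the model's internals: the names `over` and `flatten` and the tiny 1x3 sample layers are hypothetical, chosen for this example.

```python
from typing import List, Tuple

# Straight (non-premultiplied) RGBA, every channel in the range 0.0 to 1.0.
Pixel = Tuple[float, float, float, float]
Layer = List[List[Pixel]]

CLEAR: Pixel = (0.0, 0.0, 0.0, 0.0)


def over(top: Pixel, bottom: Pixel) -> Pixel:
    """Composite one pixel over another with the Porter-Duff 'over' rule."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return CLEAR

    def blend(t: float, b: float) -> float:
        return (t * ta + b * ba * (1.0 - ta)) / out_a

    return (blend(tr, br), blend(tg, bg), blend(tb, bb), out_a)


def flatten(layers: List[Layer]) -> Layer:
    """Composite same-sized layers, listed bottom-first, into one image."""
    height, width = len(layers[0]), len(layers[0][0])
    result: Layer = [[CLEAR] * width for _ in range(height)]
    for layer in layers:
        result = [
            [over(layer[y][x], result[y][x]) for x in range(width)]
            for y in range(height)
        ]
    return result


# Hypothetical 1x3 layers: an opaque blue background, and a red subject
# with one opaque pixel, one half-transparent edge pixel, one empty pixel.
background: Layer = [[(0.0, 0.0, 1.0, 1.0)] * 3]
subject: Layer = [[(1.0, 0.0, 0.0, 1.0), (1.0, 0.0, 0.0, 0.5), CLEAR]]

flat = flatten([background, subject])     # what a flat image would contain
without_subject = flatten([background])   # hide the subject layer

print(flat[0])
print(without_subject[0])
```

Hiding the subject layer leaves an intact blue background, because the background layer carries its own pixels for the region the subject covered. A mask applied to the flat image would only mark that region and leave a hole to fill.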

The idea has electrified the design and synthesis community because it implies a route to editable digital materials—something high-level creative software has been chasing for a decade.

Before going further, let us clarify availability in transparent terms:

- The paper is public and readable today.

- Developers can test a Hugging Face demo that accepts images and outputs multiple RGBA layers.

- Model weights are available for local experimentation.

- As of this writing, no commercial API has been released.

In other words, the Qwen-Image-Layered model is a major milestone, but it remains part of a research and prototyping ecosystem, not a turnkey production pipeline.

Why Layered Images Matter for Real-World Workflows

Most commercial design work faces the same constraint:

You've got a perfect image and just want to tweak one small detail—but editing it often messes up the rest of the composition, breaking proportions, lighting, or style.

A product photographed on a textured background will cast shadows. Remove the background crudely, and shadows break. Translate a packaging label into another language, and spacing, alignment, and lighting distort. Replace one object with another, and edges look artificial.

Layered images promise a different future. If a single photo can be decomposed into visual layers, each containing transparent alpha structure, creators would be able to:

- Move objects independently

- Change backgrounds without losing shadows

- Recolor or restyle surface textures

- Translate packaging while preserving layout

- Animate foreground objects in video

- Export assets into a commercial system
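The first item in the list, moving an object independently, can be sketched in a few lines once the object lives on its own layer. This is a simplified illustration with hypothetical helper names (`shift_layer`, `stack`) and hard-edged 8-bit pixels; real layers with soft alpha edges would need full alpha blending instead of the replace-if-visible rule used here.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int, int]  # 8-bit RGBA
Layer = List[List[Pixel]]

CLEAR: Pixel = (0, 0, 0, 0)
GREY: Pixel = (128, 128, 128, 255)
RED: Pixel = (255, 0, 0, 255)


def shift_layer(layer: Layer, dx: int, dy: int) -> Layer:
    """Move a layer's content by (dx, dy); pixels pushed off-canvas are dropped."""
    height, width = len(layer), len(layer[0])
    moved: Layer = [[CLEAR] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            nx, ny = x + dx, y + dy
            if 0 <= nx < width and 0 <= ny < height:
                moved[ny][nx] = layer[y][x]
    return moved


def stack(bottom: Layer, top: Layer) -> Layer:
    """Simplified compositing: a top pixel with any alpha replaces the bottom pixel."""
    return [
        [t if t[3] > 0 else b for b, t in zip(bottom_row, top_row)]
        for bottom_row, top_row in zip(bottom, top)
    ]


# Hypothetical 2x3 scene: a grey background and a one-pixel red object.
background: Layer = [[GREY] * 3 for _ in range(2)]
subject: Layer = [[RED, CLEAR, CLEAR], [CLEAR, CLEAR, CLEAR]]

original = stack(background, subject)
edited = stack(background, shift_layer(subject, dx=2, dy=1))

for row in edited:
    print(row)
```

The background layer is never modified, so the spot the object used to occupy is simply grey again after the move, with no inpainting step.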

Layering converts one-time images into infinitely reusable work materials. It turns creative production into a supply chain, not a series of disposable outputs.

Qwen-Image-Layered Availability Check — What You Can and Cannot Do Today

Because excitement often leads to misunderstanding, we will state the current conditions clearly.

What is available today:

- A public research paper detailing architecture and objectives

- A Hugging Face demo where anyone can upload images

- Downloadable weights for advanced users

What is not available today:

- No commercial API for production integration

- No SaaS platforms offering the model as a one-click feature

- No scaling workflow tuned for daily creative operations

This reality does not diminish the innovation—it simply signals that adoption will move from research → early developer tooling → commercial channels.

How to Apply “Layer-Based Thinking” Using SuperMaker Today

While the Qwen-Image-Layered model aims to automate semantic decomposition, SuperMaker already provides a range of workflows that simulate the commercial benefits of layered editing—not by magically separating layers, but by giving creators modular control over objects, backgrounds, inpainting regions, and localized changes.

In other words:

You do not need a layered-image model to operate with layered-editing logic.

You only need a tool that supports modular editing steps.

SuperMaker provides stable online AI image generation, background removal, inpainting, prompt-based regional editing, and object-level manipulation—without installation, GPU configuration, or workflow instability.

Below are some concrete workflows that convert “layer thinking” into profitable output.

Workflow 1: Product Photo Background Replacement with Natural Shadows

Product images drive e-commerce conversion, but brands spend thousands of dollars cutting out objects and managing shadow fidelity.

A layered-image mindset solves this problem elegantly.

Using Nano Banana Pro in SuperMaker:

- Upload the product photo.

- Remove the background using the prompt:

Abstract the [coffee machine] to Alpha layer.

(Generated by SuperMaker AI Image Maker.)

- Drop the object onto a new environment (upload two images: the object and the new background).

Put the [coffee machine] in image 1 to the [desk] in image 2.

(Generated by SuperMaker AI Image Maker.)

- Adjust shadow strength or details if needed.

- Export for marketplace, ads, or PDP usage.
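For readers curious what "adjusting shadow strength" means in layer terms, the sketch below treats the shadow as a black layer whose alpha is scaled before it is blended over an opaque background pixel. It is a conceptual illustration under that assumption, not a description of how SuperMaker or Nano Banana Pro works internally; the function `shade` and the sample values are hypothetical.

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # each channel in the range 0.0 to 1.0


def shade(background: RGB, shadow_alpha: float, strength: float = 1.0) -> RGB:
    """Blend a black shadow over an opaque background pixel.

    strength scales the shadow's alpha: 0.0 removes the shadow,
    1.0 keeps it as captured. The effective alpha is clamped to 0..1.
    """
    alpha = max(0.0, min(1.0, shadow_alpha * strength))
    r, g, b = background
    keep = 1.0 - alpha  # fraction of the background that still shows through
    return (r * keep, g * keep, b * keep)


desk_pixel: RGB = (1.0, 0.5, 0.25)  # hypothetical warm desk color

full_shadow = shade(desk_pixel, shadow_alpha=0.5)
soft_shadow = shade(desk_pixel, shadow_alpha=0.5, strength=0.5)
no_shadow = shade(desk_pixel, shadow_alpha=0.5, strength=0.0)

print(full_shadow, soft_shadow, no_shadow)
```

Because the shadow only darkens whatever lies beneath it, the same shadow layer stays plausible when the background changes.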

The commercial payoff:

- Faster A/B creative testing

- Lower studio production cost

- Endless location variability

- Brand control

Workflow 2: Multilingual Packaging & Label Localization

Packaging localization is one of the most demanding creative workflows: English → Spanish / Korean → Japanese / French → Arabic

A layered-image approach isolates the text while preserving brand geometry. To move the label into another language, you don't even need a separate translation tool.

Nano Banana Pro delivers exceptional text rendering right inside SuperMaker:

- Upload a packaging image.

- Enter a text prompt to change the key information:

Turn the words in image to [Spanish].

Preserve positioning, label curvature, and lighting.

(Generated by SuperMaker AI Image Maker.)

- Export localized variants for regional markets.
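When many regional variants are needed, the prompt itself can be templated. The sketch below only builds the prompt strings, using the wording shown above and the target languages mentioned earlier in this workflow; it does not call any SuperMaker API, and the names `PROMPT_TEMPLATE` and `build_prompts` are hypothetical. Each prompt would still be entered in the editor by hand.

```python
from typing import Dict, List

# Prompt wording taken from the steps above; {language} is the only variable part.
PROMPT_TEMPLATE = (
    "Turn the words in image to {language}. "
    "Preserve positioning, label curvature, and lighting."
)

TARGET_LANGUAGES: List[str] = ["Spanish", "Korean", "Japanese", "French", "Arabic"]


def build_prompts(languages: List[str]) -> Dict[str, str]:
    """Return one localization prompt per target language."""
    return {
        language: PROMPT_TEMPLATE.format(language=language)
        for language in languages
    }


prompts = build_prompts(TARGET_LANGUAGES)
for language, prompt in prompts.items():
    print(f"{language}: {prompt}")
```

Keeping the wording identical across languages makes it easier to compare the localized outputs side by side.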

Workflow 3: Object-Level Editing for Ads and Social Media

Advertising demands rapid replacement:

- Change the drink in the model’s hand

- Replace the sneakers

- Swap sunglasses

- Remove a distracting prop

The SuperMaker AI image generator supports this directly through regional editing and inpainting, enabling creators to treat visual components as logical layers.

Example A: Replace Objects in Product Showcase Images

For ecommerce brands, lifestyle product shots are expensive to reshoot every time a SKU changes. With a layered-editing workflow, a single hero scene becomes reusable creative inventory:

- Upload the product images.

- Enter a text prompt to guide the AI:

Replace the shoes in image 1 with the shirt in image 2.

(Generated by SuperMaker AI Image Maker.)

- Adjust the prompt if you are not satisfied with the results.

- Download the image.

By treating the object as a virtual “layer,” creators can generate multiple commercial variants from one master photo—ideal for PDP images, Amazon A+ content, DTC landing pages, and paid social ads.

Example B: Change Outfits and Restyle a Model for Fashion Visuals

Fashion marketing demands constant styling refresh, but new studio sessions are costly. With controlled regional editing and inpainting, a model’s outfit can be replaced while preserving pose, lighting, skin texture, and atmosphere:

- Upload existing images, such as the model and the clothes.

- Enter a prompt to guide the AI:

Put the clothes in image 2 on the model in image 1, with the blue shirt tied around her waist.

(Generated by SuperMaker AI Image Maker.)

- Adjust small details in the prompt if needed.

This workflow mirrors the value of layered-image decomposition: the model becomes one fixed layer, the clothing becomes another layer, and brands unlock rapid styling iteration without physical reshoots.

SuperMaker supports this directly through regional selection, object inpainting, and localized regeneration, enabling creators to operate as if each visual element were already separated into RGBA layers—even before the Qwen-Image-Layered model becomes a production API.

The Strategic Implication — Layered Editing = Money-Making Productivity

The shift toward layered images is not merely a technological milestone—it is a business transformation. Flat graphics tie labor to output. Every new request means:

- redo the visual

- redo the background

- redo the translation

- redo placement

- redo localization

Layer-based thinking converts static work into reusable economic instruments. The value shows up in every commercial context:

- Ecommerce listing optimization

- Paid ad variation

- Creative testing

- Regional market expansion

- Audience personalization

- Content refresh cycles

Time becomes leverage. A single design becomes multiple revenue streams.

The Qwen-Image-Layered model points toward this direction. SuperMaker lets you operationalize it now.

Will SuperMaker Integrate Layered-Image Models in the Future?

Many readers will have a simple question:

Will SuperMaker eventually integrate the Qwen-Image-Layered model? The transparent answer is:

- Today, the model is not exposed as a commercial API.

- It does not yet offer reliability guarantees for production usage.

- No SaaS platform currently deploys it at production scale.

However—and this part matters strategically—SuperMaker continuously evaluates cutting-edge imaging research. Once two conditions are satisfied:

- The Qwen-Image-Layered model becomes commercially licensable or API-accessible, and

- Its output aligns with SuperMaker’s production workflows for creators and businesses,

SuperMaker would be among the first platforms to integrate such layered-image capability.

That means this technology is not a distant abstraction. It is a future integration path, pending commercial readiness.

Final Words

Layered editing means:

- Higher throughput

- Lower creative friction

- Reusable commercial assets

- Localization without redesign

- Scalable ideation

- Motion-ready inputs

While the Qwen-Image-Layered model reveals what is coming, SuperMaker helps creators gain that advantage today—without installation, without GPU requirements, without waiting for commercial licensing.

The opportunity is simple:

Learn the layered mindset now.

Deploy it with available workflows today.

Step into the future when the model becomes production-ready.

SuperMaker stands ready to help.