Veo 3.2 Is Coming: Google’s Next AI Video Leap Leaked and What It Could Mean

A leak suggests Google's Veo 3.2 has already reached some services and is headed to Workspace. Here is how Veo has evolved, which improvements are rumored, and what AI video could look like in 2026.

The excitement in the AI community is palpable right now: Google's Veo 3.2, the next iteration of its groundbreaking text-to-video (and image-to-video) generation model, appears to be on the verge of release. As someone deeply immersed in the rapid evolution of generative AI tools, I've been following this closely, and the signs point to a release very soon, possibly within days or weeks of this writing (January 20, 2026).

A Quick Recap: The Journey to Veo 3.2

Google DeepMind's Veo series has been one of the most impressive leaps in AI video generation. Veo 3, unveiled in mid-2025, introduced native audio generation—synchronized dialogue, sound effects, and ambient noise—alongside stunning realism in physics, human motion, and prompt adherence. It felt like the moment AI video truly entered the "talkies" era.

Then came Veo 3.1 (rolled out progressively through late 2025 into early 2026), which addressed real-world production needs:

- Support for vertical (9:16) formats, perfect for YouTube Shorts, TikTok, and mobile-first content.

- Enhanced "Ingredients to Video" mode, allowing better consistency when blending reference images, characters, objects, or textures.

- Higher resolution options, including native 1080p and upscaled 4K outputs (though base generation remains at 720p in many interfaces like the Gemini app).

- Deeper integration across Google's ecosystem: Gemini app, Google Flow (the dedicated filmmaking tool), YouTube Create/Shorts, Vertex AI, and the Gemini API.

These updates made Veo more practical for creators, marketers, educators, and enterprises. Pricing on Vertex AI reflects the premium tier, at around $0.20–$0.60 per second of generated video depending on resolution and audio inclusion, while consumer access via Gemini Pro/Advanced or free trials keeps it relatively accessible. You can also experience Veo models conveniently through platforms like SuperMaker AI.
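
To put the per-second pricing in perspective, here is a minimal back-of-the-envelope sketch in Python. It assumes the approximate $0.20–$0.60 per-second range quoted above and a clip of about 8 seconds, the typical length Veo produces today. The function name `clip_cost_range` is made up for illustration and is not part of any Google SDK, and actual billing may differ.

```python
def clip_cost_range(seconds: float,
                    low_rate: float = 0.20,
                    high_rate: float = 0.60) -> tuple[float, float]:
    """Return the (cheapest, priciest) estimated cost in USD for one clip.

    The default rates are the approximate per-second figures quoted in
    this article, not an official price list.
    """
    if seconds < 0:
        raise ValueError("seconds must be non-negative")
    # Round to cents so floating-point noise does not leak into the result.
    return (round(seconds * low_rate, 2), round(seconds * high_rate, 2))


# An 8-second clip at the quoted rates.
print(clip_cost_range(8))  # (1.6, 4.8)
```

In other words, a single short clip lands somewhere between roughly $1.60 and $4.80 under these assumptions, which adds up quickly when iterating on prompts.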

Official pages (DeepMind's Veo site and recent Google AI blogs) still highlight Veo 3.1 as the flagship, with the latest public announcement around mid-January 2026 focusing on those creative control and resolution enhancements. No formal Veo 3.2 blog post or changelog exists yet.

The Leaks and Rumors Fueling the Hype

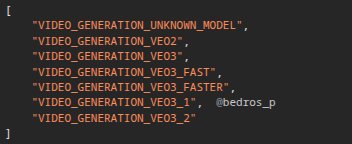

The buzz really ignited on January 18, 2026, when reliable Google internals leaker @bedros_p posted a screenshot showing "Veo 3.2 has made its way into some services - to be added to Workspace." The image appeared to come from backend configs or internal dashboards, and the post quickly gained traction (dozens of likes, reposts, and quotes within hours).

Community reactions exploded:

- Multiple AI enthusiasts and developers reposted with comments like "Veo 3.2 release imminently" and "Looks like we're getting it soon 🥳."

- Speculation ties it to codenamed checkpoints spotted on third-party AI evaluation platforms (e.g., Artificial Analysis) since late December 2025: models labeled "Sicily," "Sisyphus," and most recently "Artemis." Artemis, in particular, has shown strong image-to-video results in leaked demos, with smoother motion, better adherence to complex prompts, and hints of extended clip lengths or improved multi-shot consistency.

- Integration hints suggest Workspace (Google's productivity suite) will get direct access—potentially via Google Vids, Docs, or Slides—for quick video embeds in presentations, training materials, or marketing collateral. This aligns with Google's push to make AI-native content creation seamless in enterprise workflows.

While nothing is officially confirmed, the pattern matches how Google typically rolls out updates: internal testing → leaks from data miners like @bedros_p → gradual rollout to select users/API → full public announcement.

What Might Veo 3.2 Bring?

Based on trends, community speculation, and the trajectory from 3.0 to 3.1, here's what people are hoping for (and what seems plausible):

- Even stronger consistency and control — Better character/object persistence across longer sequences or multiple shots.

- Extended duration — Veo clips are currently short (~8 seconds); pushing toward 15–30 seconds would be huge for storytelling.

- Advanced audio improvements — More natural multi-speaker dialogue, emotional intonation, or music syncing.

- Faster/cheaper generation — Optimizations for "Veo 3 Fast" modes or lower-latency previews.

- Broader ecosystem rollout — Deeper ties to Android XR (for immersive previews), Gemini 3 reasoning (for smarter prompt auto-refinement), or even direct exports to professional editing tools.

- Competitive edge — Google likely wants to leapfrog again in realism, physics simulation, and creative fidelity.

Of course, these are educated guesses. Google tends to under-promise and over-deliver on quality jumps. For hands-on access to the latest Veo capabilities (including Veo 3.1 today and likely future updates), check out SuperMaker AI's Veo integration.

Why This Matters (My Personal Take)

Tools like Veo aren't just tech demos—they're reshaping content creation. Filmmakers can prototype scenes in minutes, marketers test ad variants at scale, educators build dynamic explanations, and hobbyists turn wild ideas into polished shorts. The barrier to "cinematic" video is evaporating, raising both exciting possibilities (democratized storytelling) and valid concerns (deepfakes, job displacement in VFX/editing).

As a follower of these developments, I've found it thrilling to watch Veo evolve from Veo 3's audio breakthrough to 3.1's production polish. If 3.2 delivers what the leaks suggest, it could cement Google's lead in practical, high-fidelity generative video for 2026. Platforms like SuperMaker AI make exploring these advancements even easier and more accessible.

Looking Ahead

Keep an eye on:

- Gemini app / Google Flow updates (new features often appear first for subscribers).

- DeepMind blog or Google AI announcements.

- Vertex AI / Gemini API changelogs for model version bumps.

- Trusted leakers like @bedros_p for early signals.
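
If you already pull a list of available model identifiers from an API or a changelog, spotting a version bump can be automated. The sketch below is only an illustration: it assumes identifiers contain a `veo-<major>.<minor>` fragment, and the sample strings (including the `veo-3.2` one) are invented for the example rather than confirmed model names. The helper `newer_veo_models` is likewise hypothetical.

```python
import re

# Assumed naming pattern: "veo-<major>.<minor>" somewhere in the identifier.
VEO_VERSION = re.compile(r"veo-(\d+)\.(\d+)")


def newer_veo_models(model_ids: list[str],
                     baseline: tuple[int, int] = (3, 1)) -> list[str]:
    """Return the identifiers whose Veo version is strictly above baseline."""
    newer = []
    for model_id in model_ids:
        match = VEO_VERSION.search(model_id)
        if match is None:
            continue  # Not a Veo identifier under the assumed pattern.
        version = (int(match.group(1)), int(match.group(2)))
        if version > baseline:
            newer.append(model_id)
    return newer


# Invented sample data; real identifiers may look different.
sample_ids = [
    "veo-3.0-generate-001",
    "veo-3.1-generate-preview",
    "veo-3.2-generate-preview",
    "some-other-model",
]
print(newer_veo_models(sample_ids))  # ['veo-3.2-generate-preview']
```

Comparing versions as integer tuples rather than strings avoids mistakes such as treating "3.10" as older than "3.2".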

If/when Veo 3.2 drops, I'll be diving in immediately—generating test clips, comparing outputs, and sharing results. This space moves fast, and 2026 is shaping up to be the year AI video goes mainstream. Ready to try Veo-powered creation yourself? Head over to SuperMaker AI to get started.

What are you most excited (or worried) about with the next wave of video AI? Drop your thoughts below—I'd love to discuss!