LingBot-World: The Dawn of Interactive Digital Simulators Built on Wan

In the fast-moving field of artificial intelligence, the line between "generating a video" and "simulating a world" is starting to blur. On January 28, 2026, Robbyant, the embodied AI division of Ant Group, open-sourced LingBot-World. The model is not just another video generator: it is a real-time, interactive world simulator that lets users explore and manipulate virtual environments at high visual fidelity.

For developers, the most interesting part of LingBot-World is its lineage. The project is built on the Wan model and uses Wan's spatiotemporal coherence as the base for something new: a controllable, "explorable" digital reality.

What is LingBot-World?

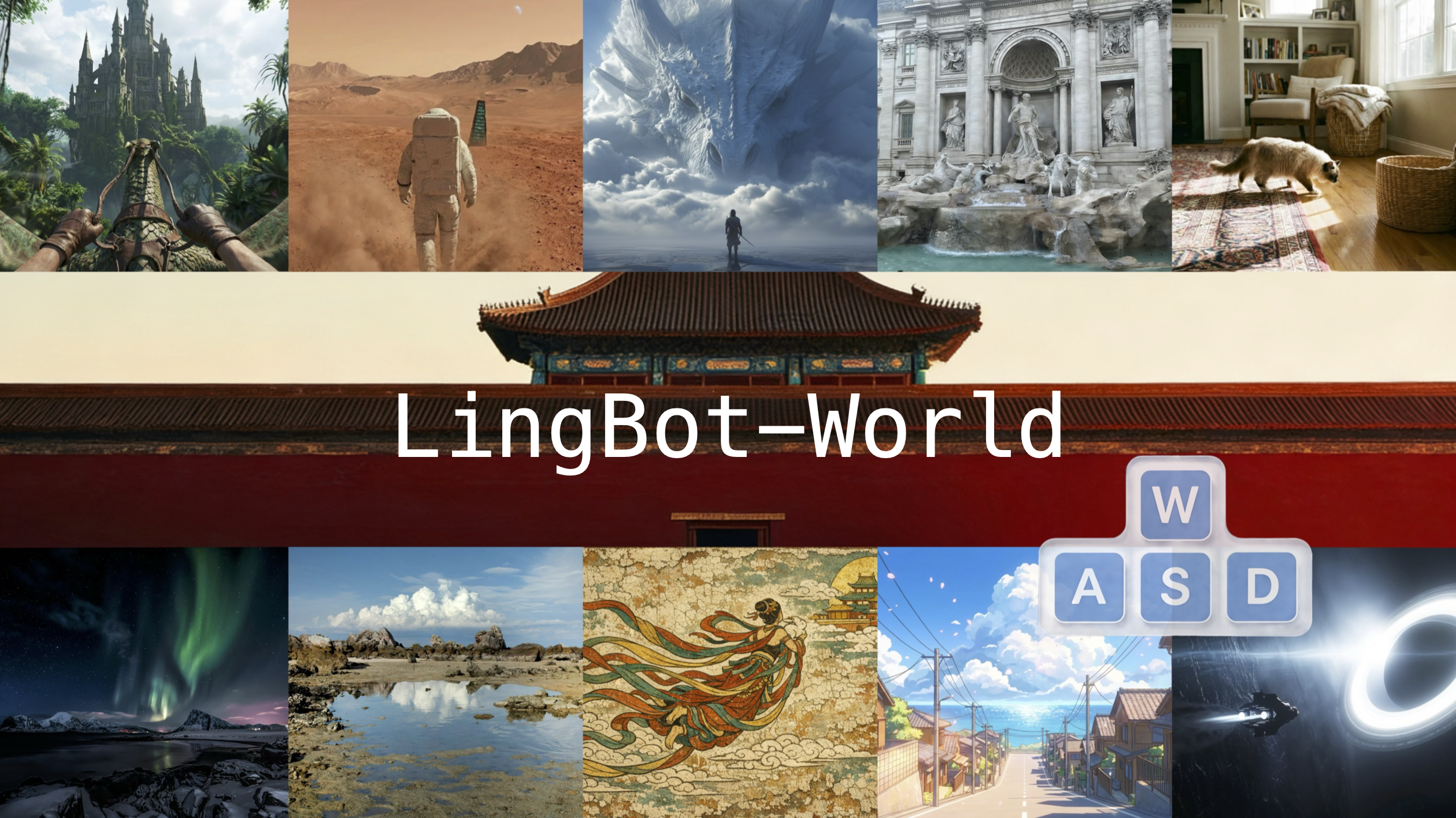

LingBot-World is an open-source world model designed to act as a "digital twin" of both real and imaginary spaces. Unlike standard video models that produce a linear clip from a prompt, LingBot-World generates a video stream that responds to user inputs in real time. Whether the user is navigating a fantasy forest or driving through a bustling cyberpunk city, the model keeps physics and geometry consistent.
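The difference between a one-shot clip and an input-driven stream can be sketched as a loop in which every new frame depends on the frames so far plus the current user action. The following is a toy illustration only, not LingBot-World's API: `KEY_TO_DELTA`, `encode_keys`, `toy_step`, and `rollout` are hypothetical names, and a "frame" here is just a camera position standing in for an image.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Action = Tuple[int, int]  # (forward, strafe)
Frame = Tuple[int, int]   # toy "frame": only the camera position

# Hypothetical key bindings, assumed for illustration.
KEY_TO_DELTA = {"W": (1, 0), "S": (-1, 0), "A": (0, -1), "D": (0, 1)}


def encode_keys(keys: str) -> Action:
    """Sum the deltas of all pressed keys into one action; unknown keys are ignored."""
    pressed = [KEY_TO_DELTA[k] for k in keys if k in KEY_TO_DELTA]
    return (sum(d[0] for d in pressed), sum(d[1] for d in pressed))


def toy_step(history: List[Frame], action: Action) -> Frame:
    """Stand-in for the model: the next frame depends on the last frame and the action."""
    x, y = history[-1]
    return (x + action[0], y + action[1])


def rollout(
    step: Callable[[List[Frame], Action], Frame],
    first_frame: Frame,
    key_stream: Iterable[str],
) -> Iterator[Frame]:
    """Yield one frame per input, feeding each result back in as history."""
    history = [first_frame]
    for keys in key_stream:
        frame = step(history, encode_keys(keys))
        history.append(frame)
        yield frame


frames = list(rollout(toy_step, (0, 0), ["W", "W", "WD", "", "S"]))
print(frames)  # [(1, 0), (2, 0), (3, 1), (3, 1), (2, 1)]
```

A prompt-to-clip model would compute all frames up front. Here the generator cannot produce frame N until it has seen input N, which is what makes the stream interactive.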

By building on the architecture of the Wan model, the Robbyant team has tackled one of the hardest problems in world modeling: long-term consistency. In LingBot-World, if you turn the camera away from a building and then turn back three minutes later, the building is still there, exactly as you left it.

Technical Foundations: The Power of Three-Stage Training

LingBot-World was developed with a three-stage training pipeline. The staged approach is meant to ensure that the model not only looks good but also behaves logically.

1. Pre-training: Establishing the Visual Prior

The first stage built the visual foundation. Using the large video-text datasets and diffusion transformer (DiT) techniques of the Wan model, LingBot-World established a "general video prior". This stage gave the model the ability to render complex textures, lighting, and motion with cinematic quality.

2. Middle-training: Injecting Interaction

In the second stage, the team transitioned the model from a passive generator to an active simulator. They introduced a specialized data engine that combined:

- Real-world Ego-perspective Videos: To learn natural motion.

- Action-annotated Game Data: To understand how "WASD" keys correlate with environmental movement.

- Unreal Engine Synthetic Data: To provide "ground truth" for perfect camera trajectories.
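One simple way to picture a data engine that combines three sources is weighted sampling: each training example is drawn from one source with a fixed probability. The sketch below assumes that scheme. The source names and the 50/30/20 split are hypothetical, since the real mixing proportions are not given here.

```python
import random
from collections import Counter
from typing import List

# Hypothetical mixing weights, chosen only for illustration.
SOURCE_WEIGHTS = {
    "real_ego_video": 0.5,         # real-world ego-perspective videos
    "game_action_annotated": 0.3,  # game footage with key-press labels
    "unreal_synthetic": 0.2,       # Unreal Engine renders with known camera paths
}


def sample_sources(n: int, seed: int = 0) -> List[str]:
    """Draw n source names, each picked with probability proportional to its weight."""
    rng = random.Random(seed)  # seeded so the draw is reproducible
    names = list(SOURCE_WEIGHTS)
    weights = [SOURCE_WEIGHTS[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


batch_sources = sample_sources(10_000)
counts = Counter(batch_sources)
for name, weight in SOURCE_WEIGHTS.items():
    print(f"{name}: target {weight:.2f}, observed {counts[name] / len(batch_sources):.3f}")
```

Over many draws the observed shares approach the target weights, so changing one number shifts how much the model sees of each kind of data.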

3. Post-training: Optimization for Real-Time

To achieve a playable frame rate, the model underwent distillation and causal attention optimization. This allows LingBot-World to run at approximately 16 FPS, making it feel like a cloud-rendered video game rather than an AI inference task.
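The exact attention pattern is not spelled out here, but a common form of causal attention in streaming video models is frame-level masking: tokens in a frame may attend to tokens in the same or earlier frames, never to later ones. The sketch below builds such a mask in plain Python under that assumption. `frame_causal_mask` is a hypothetical helper, not a function from the LingBot-World codebase.

```python
from typing import List


def frame_causal_mask(num_frames: int, tokens_per_frame: int) -> List[List[bool]]:
    """Return mask[q][k], True when query token q may attend to key token k.

    Tokens are laid out frame by frame, so token index // tokens_per_frame
    gives the frame a token belongs to.
    """
    total = num_frames * tokens_per_frame
    mask = []
    for q in range(total):
        q_frame = q // tokens_per_frame
        # Allow keys from the same frame or any earlier frame.
        mask.append([(k // tokens_per_frame) <= q_frame for k in range(total)])
    return mask


mask = frame_causal_mask(num_frames=3, tokens_per_frame=2)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))

# Time available per frame at roughly 16 FPS.
FRAME_BUDGET_MS = 1000 / 16
print(FRAME_BUDGET_MS)  # 62.5
```

Because no frame depends on future frames, frames can be emitted one at a time as inputs arrive. At about 16 FPS, that leaves roughly 62.5 ms to produce each frame, which is why distillation to fewer sampling steps matters.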

Why "World Models" Matter for the Future

The release of LingBot-World marks a shift from AI that imagines to AI that simulates. This has profound implications for several industries:

- Embodied AI & Robotics: Robots can now "dream" of their environments before they ever step into the real world. By training in a LingBot-simulated environment, a robot can learn to navigate complex obstacles without the risk of damaging physical hardware.

- Game Development: Imagine a game where the world isn't pre-built by artists but generated on the fly by a Wan-derived model. This could lead to open-ended, personalized gaming experiences.

- Autonomous Driving: Simulating "edge cases"—like a child chasing a ball into a foggy street—becomes safer and more scalable when the world model can generate these scenarios realistically with precise control.

The Open-Source Impact

Perhaps the most significant aspect of LingBot-World is that it is open. By releasing the weights and code on GitHub and Hugging Face, Robbyant is challenging the dominance of closed-source models like Google's Genie 3.

Because the model is openly available, researchers can build on top of a "living" simulation. Developers can fine-tune it for specific domains, such as medical simulations or architectural walkthroughs, while keeping the core strengths inherited from the underlying Wan architecture.

Conclusion: A New Era of Interaction

LingBot-World is more than just a technical milestone; it is a glimpse into a future where the line between the digital and the physical is increasingly blurred. By combining the high-fidelity video generation capabilities of Wan with real-time interactive controls, Robbyant has provided the community with a powerful tool for the next generation of AI applications.

As the field moves forward, world models may well become a standard interface for how we interact with machines. Whether you are a researcher, a gamer, or a creator, the world is now at your fingertips, and it's open for exploration.